A Cortex in the Crater

The case for building a Lunar brain

Risk & Progress explores risk, human progress, and your potential. My mission is to educate, inspire, and invest in concepts that promote a better future for all.

It is the year 2053. In a visit with your “doctor,” an AI app on your phone, you are diagnosed with a rare form of leukemia. After your initial surprise, your “doctor” assures you that all will be OK. After a consultation with the Lunar Crater Cortex, what would have been a terminal illness a few years ago is now little more than an inconvenience. This superintelligent, Lunar-based AI will design and prescribe a cure, tailor-made for you and your disease. Reassured, you go about your day, confident that science and knowledge have saved your life. While this scenario is science fiction today, it may become reality sooner than you think.

After all, our medical breakthroughs, from organ transplants and antibiotics to machines that can literally see through the skin, would have appeared to be science fiction to our ancestors. Throughout our exploration of human achievement, I have maintained that all progress is rooted in two fundamental elements: energy and knowledge. Energy is required to do things, and the universe demands that we increase entropy by dissipating it. Knowledge, encoded into physical matter, is how we carry out this mission. The survival and flourishing of our species depend on our ability to increase both energy capture and knowledge discovery. Artificial Intelligence holds the potential to accelerate both.

Over the last few years, as researchers began deploying, scaling, and testing larger and more intelligent AI systems, a set of empirical “laws” has emerged. These “AI scaling” laws hold that, if we aim to build a superintelligent AI, the kind that can rapidly increase the total stock of human knowledge, we need to scale up the model size, the total training data, and raw computational power. When we scale up each of these three variables, error rates trend downward, and the AI’s problem-solving capacity increases in a predictable way. This is why so many companies are investing heavily, some betting it all, on multibillion-dollar data centers with hundreds of thousands of GPUs: they have a good idea what will come out the other end, and the promise is worth the risk.
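The “predictable” part can be made concrete with a toy model. Published scaling-law studies typically fit error as a power law in scale, and the sketch below assumes only that general form. The constants `a` and `alpha` are hypothetical placeholders chosen for illustration, not fitted values from any real system.

```python
def predicted_error(scale: float, a: float = 1.0, alpha: float = 0.1) -> float:
    """Toy power law: error = a * scale**(-alpha).

    `scale` stands in for model size, training data, or compute.
    `a` and `alpha` are hypothetical values, for illustration only.
    """
    return a * scale ** (-alpha)


def improvement_per_tenfold(alpha: float = 0.1) -> float:
    """Factor by which error shrinks each time scale grows tenfold."""
    return 10.0 ** (-alpha)


if __name__ == "__main__":
    for scale in (1e3, 1e4, 1e5, 1e6):
        print(f"scale={scale:.0e}  error={predicted_error(scale):.3f}")
    print(f"error multiplier per 10x scale: {improvement_per_tenfold():.3f}")
```

Under a power law, every tenfold increase in scale buys the same proportional drop in error. That regularity is what lets a company forecast the payoff of a larger data center before building it.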

The challenge for humanity doesn’t lie with scaling AI compute; it lies with energy. Chips and algorithms, to be sure, will become more efficient over time, but they will still require an immense amount of energy to train and run superintelligent AIs. Already, large data centers have energy requirements comparable to those of an entire town. If AI scaling laws hold, as we expect them to, and AIs can solve the problems we hope they will, this will come at the cost of rising energy demand. We might expect, for example, total energy demand to double or quadruple every decade from here on out. Obviously, this is a huge problem, one that we are unprepared for.
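To get a feel for what “double or quadruple every decade” implies, the figure can be converted into an equivalent annual growth rate and compounded forward. This is a minimal sketch that assumes smooth exponential growth; the function names are my own.

```python
def annual_growth_rate(factor_per_decade: float) -> float:
    """Constant yearly growth rate that compounds to the given factor in 10 years."""
    return factor_per_decade ** (1.0 / 10.0) - 1.0


def demand_multiplier(factor_per_decade: float, years_elapsed: float) -> float:
    """How many times larger demand is after `years_elapsed` years."""
    return factor_per_decade ** (years_elapsed / 10.0)


if __name__ == "__main__":
    for factor in (2.0, 4.0):
        rate = annual_growth_rate(factor)
        after_30 = demand_multiplier(factor, 30.0)
        print(f"x{factor:.0f} per decade = {rate:.1%} per year, "
              f"x{after_30:.0f} after 30 years")
```

Doubling every decade works out to roughly 7 percent growth per year, and quadrupling to roughly 15 percent. Sustained for thirty years, those rates multiply demand 8-fold and 64-fold respectively.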

Not only is our Energy Return on Investment declining, but we also still face the challenge of increasing concentrations of greenhouse gases in our atmosphere. Even if, however, we assume no energy constraints and that climate change is a solvable problem, we still have an issue: our old friends, the laws of thermodynamics. As we discussed earlier, all life runs in service of entropy, the universe’s natural need to dissipate energy. Every action we take creates a small amount of waste heat. Today, this wasted heat energy is so insignificant that we barely notice it. We don’t, for example, consider the global impact of the heat generated by turning on a phone or laptop, but it’s there. Already, some 2 percent of global warming can be attributed, not to our emissions of greenhouse gases, but to the waste heat of our machines. A world tiled with AI data centers would scale this problem up very quickly.

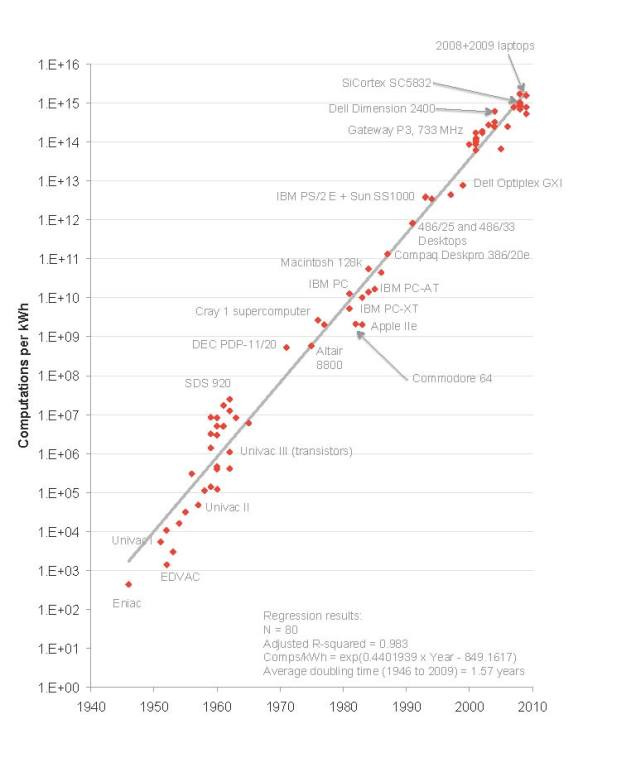

To be sure, our computers are becoming more thermally efficient over time. In 2010, researcher Jonathan Koomey noticed what has come to be known as “Koomey’s Law” or the “energy Moore’s Law.” He noted that from the 1940s onward, the number of computations that could be executed per joule (a unit of energy) had doubled roughly every 1.57 years. This means that about every year and a half, a computer could handle twice as much work using the same energy. This is why, for example, your smartphone, a “supercomputer” in your pocket, can run for an entire day on a single battery charge. It’s also why it doesn’t burn your skin when you use it.
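A doubling every 1.57 years compounds quickly. The sketch below simply applies that doubling period over longer spans; it assumes the trend holds steadily, and the function name is my own.

```python
KOOMEY_DOUBLING_YEARS = 1.57  # doubling period quoted above


def efficiency_gain(years: float,
                    doubling_years: float = KOOMEY_DOUBLING_YEARS) -> float:
    """Multiplier on computations per joule after `years` of steady doubling."""
    return 2.0 ** (years / doubling_years)


if __name__ == "__main__":
    for span in (1.57, 10.0, 20.0):
        print(f"{span:5.2f} years: x{efficiency_gain(span):,.0f} computations per joule")
```

At that pace, a single decade yields roughly an 80-fold improvement in work done per unit of energy.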

Unlike the laws of physics, however, Koomey’s law is empirical; it is based purely on observations, and it is slowing down. Today, the doubling interval for computations per joule is closer to 2–2.5 years. This slowdown is closely related to the slowing of Moore’s Law. We’re building transistors so small that we are approaching the limits of physics, and eventually, we will reach a point where we can no longer improve thermal efficiency. This barrier, known as Landauer’s limit, is a rule from thermodynamics stating that erasing one bit of information, as conventional computers do constantly, must dissipate a minimum of about 3 × 10⁻²¹ joules as heat at room temperature. In other words, physics remains the law; we cannot cheat entropy. On current trends, we are predicted to reach Landauer’s limit by about 2080. Admittedly, the heat wasted per operation is incredibly tiny, but multiplied across millions upon millions of GPUs, it will inevitably heat the Earth. Taken to an extreme, it could, together with the greenhouse effect, render Earth inhospitable to life.
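The room-temperature figure follows from the standard expression for Landauer’s limit, E = k_B · T · ln 2, where k_B is the Boltzmann constant and T is the temperature in kelvin. A minimal sketch, assuming “room temperature” means 300 K; the erasure rate in the example is a hypothetical round number, not a measurement of any real chip.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the SI system


def landauer_limit_joules(temperature_k: float = 300.0) -> float:
    """Minimum heat dissipated when one bit is erased at the given temperature."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


def minimum_power_watts(bit_erasures_per_second: float,
                        temperature_k: float = 300.0) -> float:
    """Thermodynamic floor on dissipated power for a given erasure rate."""
    return bit_erasures_per_second * landauer_limit_joules(temperature_k)


if __name__ == "__main__":
    print(f"Limit at 300 K: {landauer_limit_joules():.3e} J per bit")
    # Hypothetical workload: 1e21 bit erasures per second.
    print(f"Floor at 1e21 erasures/s: {minimum_power_watts(1e21):.2f} W")
```

The bound is a floor, not a description of today’s hardware, which dissipates far more than this per operation.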

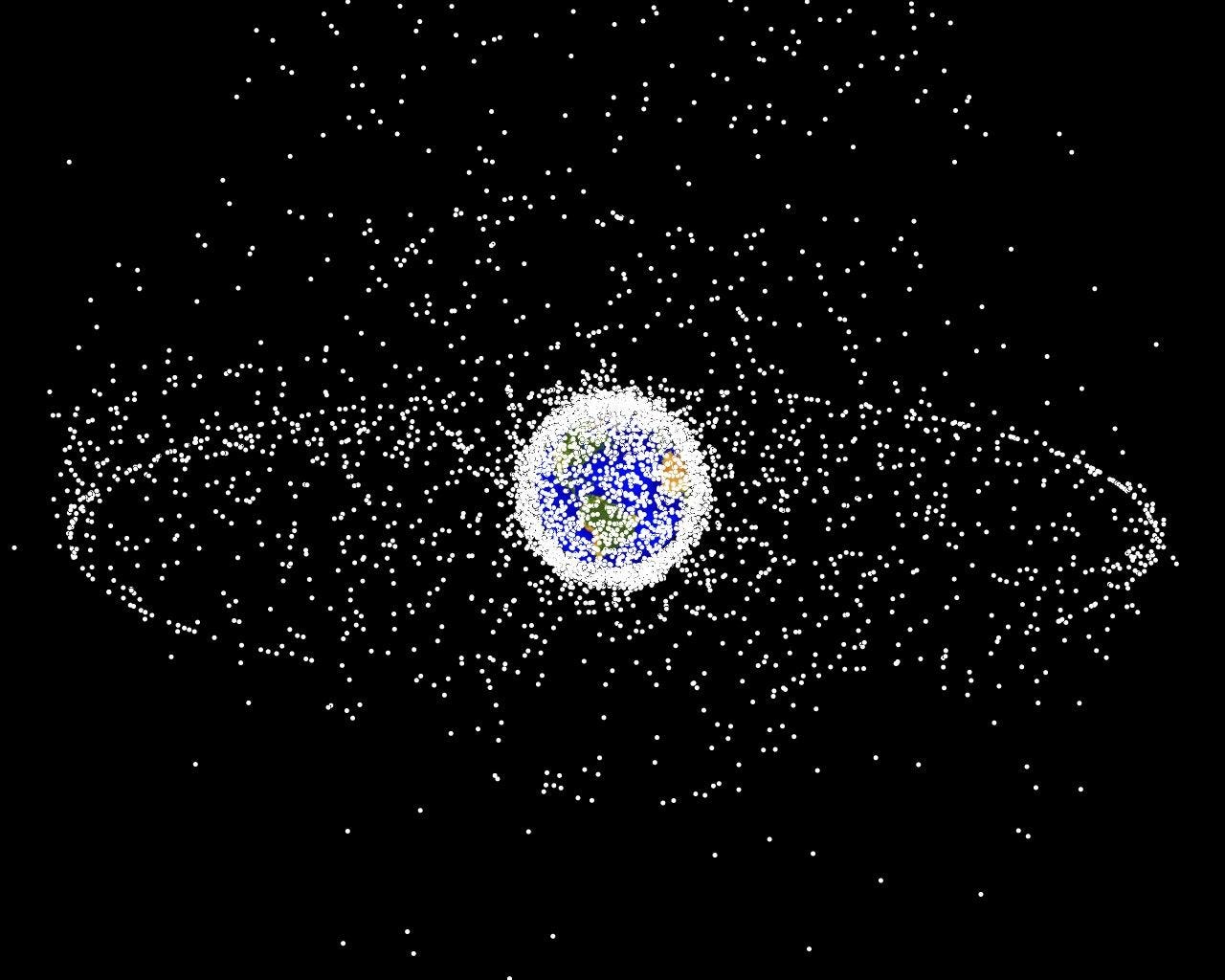

Some innovators are looking for solutions in the heavens, suggesting that we place our data centers into Earth orbit. SpaceX, for example, already has thousands of laser-linked communication satellites orbiting the planet. Perhaps these could be upgraded to carry GPUs and function as a distributed global data center. Here, too, however, we will eventually run into scaling limits due to the Kessler Syndrome, where the density of orbital objects becomes so high that collisions between them can create an uncontrollable cascade followed by a perilous, semi-permanent orbital debris field. This would trap humanity on Earth for generations. Not a good outcome.

Lunar Lunacy

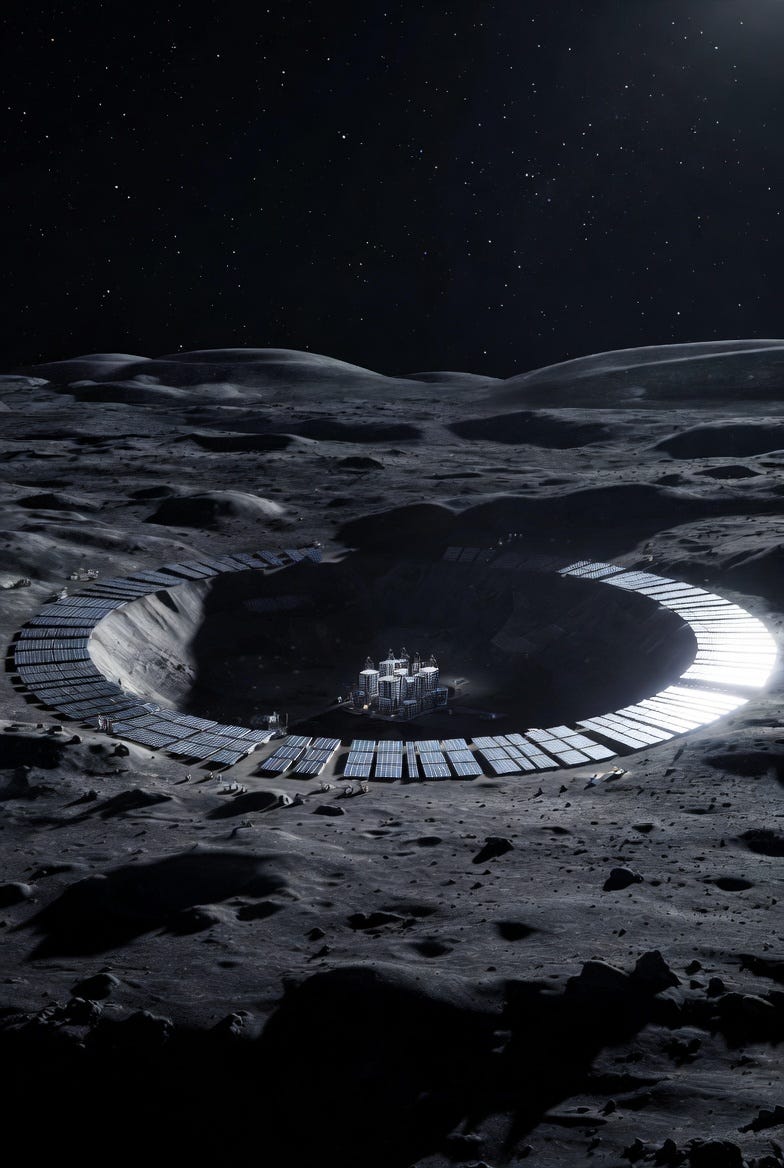

Luckily for humanity, Earth is blessed by the presence of our Moon. The Moon has long been seen as a potential proving ground for human space exploration technology and even a proverbial filling station on the way to Mars or other distant locations in the Solar System. The business case for lunar colonization, however, has been suspect. The energy and heat challenges of AI data centers may change this. The Moon has no atmosphere, and some of its permanently shadowed craters stay within a few tens of degrees of absolute zero, making them a near-perfect place to radiate heat directly into the void of space. Not only could this solve the environmental challenge of waste heat, but it would also allow the data center to be smaller, lighter, and cheaper, and it would reduce total energy consumption. There would be no need for vast quantities of cooling water, piping, air conditioning systems, fans, pumps, etc. Estimates suggest that simpler radiative cooling would cut total energy use by up to 40 percent.
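How much radiator would such a facility need? A rough, idealized estimate comes from the Stefan-Boltzmann law, under which net radiated power per unit area is ε · σ · (T_radiator⁴ − T_sink⁴). Every input below is an assumption of mine for illustration: a 1 MW heat load, a radiator surface at 300 K, a 40 K crater environment, and an emissivity of 0.9. The sketch also ignores sunlight, heat from nearby terrain, and the plumbing needed to move heat to the panel.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def radiator_area_m2(heat_load_w: float,
                     radiator_temp_k: float,
                     sink_temp_k: float = 40.0,   # assumed crater environment
                     emissivity: float = 0.9) -> float:  # assumed coating
    """Area of a one-sided flat radiator needed to reject `heat_load_w`."""
    if radiator_temp_k <= sink_temp_k:
        raise ValueError("radiator must be warmer than its surroundings")
    net_flux = emissivity * STEFAN_BOLTZMANN * (
        radiator_temp_k ** 4 - sink_temp_k ** 4
    )
    return heat_load_w / net_flux


if __name__ == "__main__":
    # Hypothetical 1 MW data hall with radiators held at 300 K.
    area = radiator_area_m2(1e6, 300.0)
    print(f"Radiator area for 1 MW at 300 K: {area:,.0f} m^2")
```

Under these assumptions, a megawatt of waste heat needs on the order of 2,400 square meters of radiator. Because flux rises with the fourth power of temperature, running the radiator hotter shrinks that area sharply.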

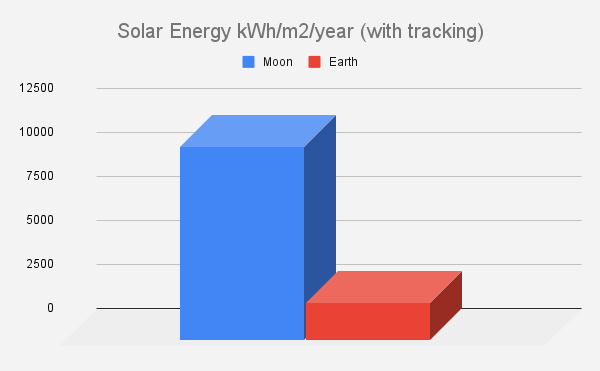

This reduced energy requirement dovetails with the second reason one might want to build data centers on the Moon: abundant solar energy. The Moon, absent clouds, storms, and an atmosphere, receives far more solar energy per hour of sunlight than Earth does. An Earth-based solar panel in an ideal location will receive approximately 1644 kWh/m²/year of solar energy. A Moon-based solar panel, however, receives approximately 3822 kWh/m²/year. In other words, static panels on the Moon receive over twice the energy for a given area. The advantages of the Moon become more extreme, however, if we place the panels at the Lunar poles, which are bathed in sunlight 80-95 percent of the year. Combined with solar tracking and orientation, panels located on the Shackleton Crater rim would receive approximately 10,770-11,500 kWh/m²/year. A comparable solar panel on Earth, also using tracking, is limited to approximately 2190 kWh/m²/year. In sum, Lunar-based solar at the poles could generate roughly five times more annual energy with the same panel area.
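The ratios implied by these figures are easy to check. The sketch below uses only the numbers quoted above; the variable and function names are my own.

```python
# Annual solar energy received, in kWh per square meter per year (from the text).
EARTH_FIXED = 1644.0
MOON_FIXED = 3822.0
EARTH_TRACKING = 2190.0
SHACKLETON_TRACKING = (10770.0, 11500.0)  # low and high estimates


def fixed_panel_advantage() -> float:
    """Moon-to-Earth ratio for static panels."""
    return MOON_FIXED / EARTH_FIXED


def polar_tracking_advantage() -> tuple:
    """Low and high Moon-to-Earth ratios for tracking panels at the pole."""
    low, high = SHACKLETON_TRACKING
    return low / EARTH_TRACKING, high / EARTH_TRACKING


if __name__ == "__main__":
    print(f"Static panels: x{fixed_panel_advantage():.2f}")
    low, high = polar_tracking_advantage()
    print(f"Polar tracking panels: x{low:.2f} to x{high:.2f}")
```

Static panels come out at about 2.3 times the Earth figure, and tracking panels on the crater rim at about 4.9 to 5.3 times.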

Launch Costs

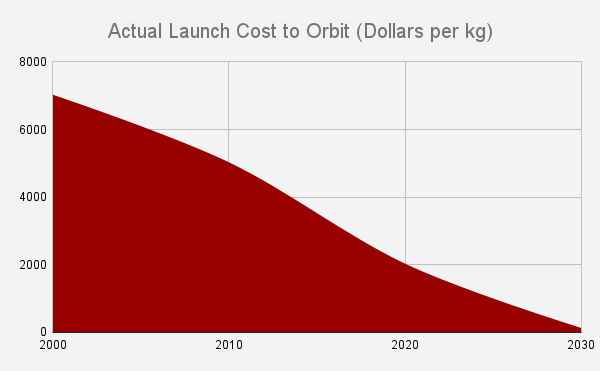

If you have made it this far, you are probably thinking: still, this doesn’t make any sense! The sheer cost of launching data centers, landing them, and assembling them on the Moon would seem to defy reasonable economics. Perhaps it does. We need to account for the fact, however, that the cost of launching payloads into space is falling fast. In the early 2010s, SpaceX’s Falcon 9 rocket demonstrated that it was possible to design, build, and launch orbital rockets at about half the cost of the stodgy space industry. They did this with innovative, first-principles approaches to rocket design and manufacture, bringing the cost of a rocket closer to that of its constituent raw materials. The rest of the industry is now mimicking their approach: Europe’s Ariane 6, Japan’s H3, and ULA’s Vulcan, among others, are catching up.

Since then, however, SpaceX has proven the economics of partial rocket reuse. While hard data is not available, reuse of the Falcon 9 first stage, which amortizes the cost of building it over dozens of launches, could easily cut the cost of a space launch by another 50 percent or more. This is true even if we consider the payload penalties associated with reuse, including the addition of fins, fuel reserves, header tanks, heat-shielding, etc. Launch prices have not declined much yet, if for no other reason than that SpaceX has no competition in the reuse space. That’s changing. Rocket Lab’s Neutron, Blue Origin’s New Glenn, and China’s CZ-10 and Zhuque-3 hope to catch up before 2030. Competition will force launch prices down.
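The logic of amortization can be sketched in a few lines. Since hard data is not available, every number below is a hypothetical placeholder in arbitrary cost units, chosen so that an expendable launch costs 100; none of them are SpaceX figures.

```python
def cost_per_launch(first_stage_build: float,
                    upper_stage_build: float,
                    operations: float,
                    flights_per_booster: int,
                    refurbishment_per_flight: float = 0.0) -> float:
    """Average cost of one launch when the first stage flies several times.

    The upper stage is treated as expended on every flight.
    """
    if flights_per_booster < 1:
        raise ValueError("a booster must fly at least once")
    amortized_booster = first_stage_build / flights_per_booster
    return (amortized_booster + refurbishment_per_flight
            + upper_stage_build + operations)


if __name__ == "__main__":
    # Hypothetical split: booster 60, upper stage 20, operations 20.
    expendable = cost_per_launch(60.0, 20.0, 20.0, flights_per_booster=1)
    reused = cost_per_launch(60.0, 20.0, 20.0, flights_per_booster=20,
                             refurbishment_per_flight=5.0)
    print(f"Expendable: {expendable:.0f}  Reused: {reused:.0f}  "
          f"Saving: {1 - reused / expendable:.0%}")
```

With this made-up cost split, twenty flights per booster cut the per-launch cost by about half. The saving depends heavily on how much of the vehicle’s cost sits in the first stage and on how cheap refurbishment turns out to be.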

Still, these rockets are only partially reusable. They all expend the upper stage, throwing away millions of dollars of hardware on every flight. Second-stage reuse could bring launch costs down further. SpaceX hopes that Starship’s full reusability, combined with its large payload capacity, will achieve an astonishing launch cost of less than $100/kg. Others are working on this problem too, including US-based Stoke Space and China’s CALT. While full reuse remains unproven, flight tests of Starship are promising. By the 2030s or 2040s, the primary impediment to space access may be the cost of fuel, not the rocket. At that point, space tethers and other kinds of space-based infrastructure could bring access costs even lower.

What may sound like science fiction today could end up being an important turning point in the future history of the human species. Centuries ago, explorers and settlers from Europe travelled to the New World in search of riches, physical matter that they could extract from the land. Our descendants may travel too, this time to distant locations in the Solar System, not only in pursuit of resources, but in search of energy and new methods to accelerate the discovery of knowledge for the benefit of all.
